If you want to disallow or block a sub-directory (folder), follow the steps below. Be careful with the rules you put into your robots.txt to disallow a specific directory, because a small mistake can block search engine bots from crawling your website.

What is a sub-directory?

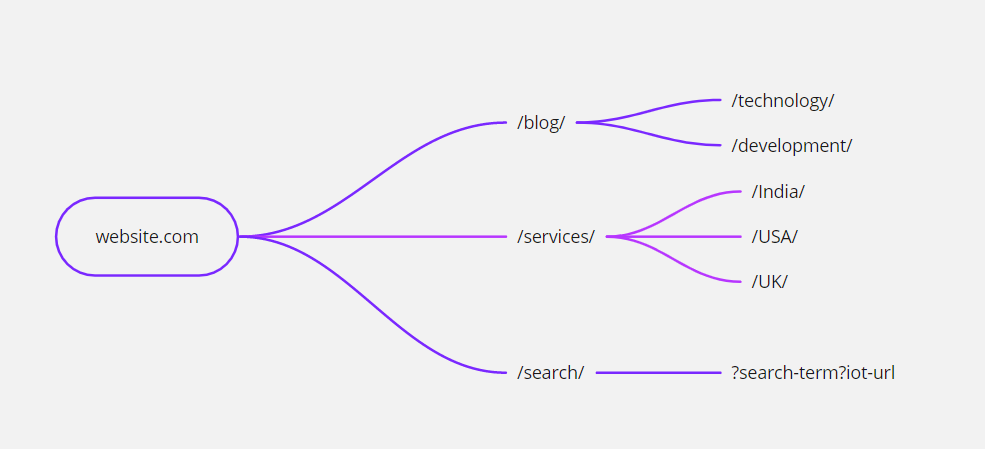

A sub-directory is a directory inside another directory. In simple words, a folder within a folder is called a sub-directory or sub-folder. Let’s take a look at the image above.

Sub-directory URL structure

www.website.com/services/india/page-url.html

www.website.com/services/usa/page-url.html

In the two examples above, /india/ and /usa/ are the two sub-directories we will block in robots.txt.

Robots.txt to disallow sub-directories

User-agent: *

Disallow: /services/india/

Disallow: /services/usa/

The robots.txt above stops bots from crawling /india/ and /usa/, but the rest of the content inside the /services/ directory will still be crawled unless it is disallowed by a separate rule.
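Because a small mistake in these rules can block more than intended, it may help to test them locally before uploading the file. The following is a minimal sketch, assuming Python's standard `urllib.robotparser` module is a close enough stand-in for how a crawler reads simple prefix rules. The helper name `is_allowed` and the page paths are illustrative, not part of any real site.

```python
from urllib.robotparser import RobotFileParser

# The same rules as in the robots.txt shown above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /services/india/
Disallow: /services/usa/
"""


def is_allowed(path, user_agent="*"):
    """Return True if the given path may be crawled under ROBOTS_TXT.

    Hypothetical helper for illustration only.
    """
    parser = RobotFileParser()
    # parse() takes the file content as a list of lines.
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, "http://www.website.com" + path)


if __name__ == "__main__":
    for path in (
        "/services/india/page-url.html",
        "/services/usa/page-url.html",
        "/services/page-url.html",
    ):
        status = "allowed" if is_allowed(path) else "blocked"
        print(path, "->", status)
```

With these rules, the two sub-directory pages should report as blocked while a page directly under /services/ stays allowed. Note that this standard-library parser only does plain prefix matching, so it is not a reliable check for rules that use wildcards such as `*`.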

Robots.txt to disallow search queries

User-agent: *

Disallow: /search*